I want to implement fractional Laplacian operator (- \Delta)^{s}. Consider the example problem ( -\Delta)^{s} u(x)= f(x). For f(x)= cos (2 \pi x ), we have the exact solution u_{exact}(x)=cos(2 \pi x)/(2 \pi)^{2 s}.

Below is a code for above example problem that I came up using GitHub - MiroK/hsmg: multigrid for fractional Laplacian. Numerically obtained u(x) and exact solution u_{exact}(x) are matching.

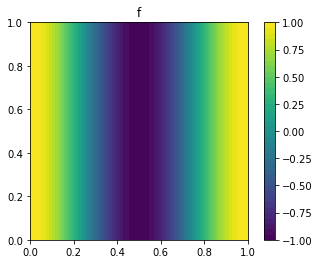

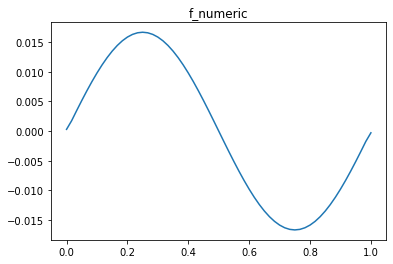

However, numerically obtained f_{numeric}(x) by applying ( -\Delta)^{s} operator on u(x) does not match with the known f(x) (see plots).

(I am using Dirichelt boundary condition as an example for obtaining preconditioner matrix B.)

How to correctly implement fractional Laplacian operator?

from dolfin import *

from hsmg.hseig import HsNorm

from hsmg.hsquad import InvFLaplace

from block.iterative import ConjGrad

s=0.4 # fractional order of differentiation

n=30 # mesh size

mesh = UnitSquareMesh(n, n)

V = FunctionSpace(mesh, "CG", 1)

#### forcing function f in RHS

f_expression = Expression('cos(2*pi*x[0])', degree=2)

f= interpolate(f_expression, V)

#### Exact solution u_exact

u_exact_expression = Expression(' cos(2*pi*x[0]) / pow(2*pi, 2*s) ', s=s, degree=2)

u_exact= interpolate(u_exact_expression, V)

#### numerical solution uh

A = HsNorm(V, s)

bcs= DirichletBC(V, Constant(0), 'on_boundary')

B = InvFLaplace(V, s, bcs, compute_k=0.5)

Ainv = ConjGrad(A, precond=B, tolerance=1E-10)

b = assemble(inner(TestFunction(V), f)*dx)

uh = Function(V, Ainv*b)

#### numerically evaluated f

b2 = assemble(inner(TestFunction(V), uh)*dx)

f_numeric= Function(V, A*b2)

print( errornorm(f, f_numeric, 'L2') )